On the left, Peter Sellers from the classic Cold War satire “Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb.” On the right, a side order of bacon. Here’s how I learned to love them both.

Let’s begin with science, or rather bad science. In fact, non-reproducibly bad science. From first-rate science journalist Carl Zimmer:

C. Glenn Begley, who spent a decade in charge of global cancer research at the biotech giant Amgen, recently dispatched 100 Amgen scientists to replicate 53 landmark experiments in cancer—the kind of experiments that lead pharmaceutical companies to sink millions of dollars to turn the results into a drug. In March Begley published the results: They failed to replicate 47 of them.

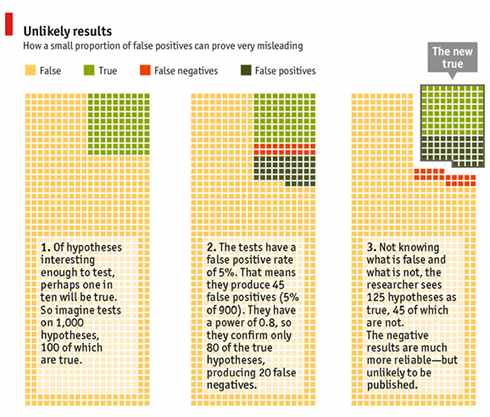

Zimmer goes on to cite John Ioannidis, author of the “game-changing 2005 paper” “Why Most Published Research Findings Are False.” My favorite take is from the Economist, which produced the infographic below. The article itself is gated, but an excellent ungated short video is worth a minute and a half of your time.

What the graphic shows is that even though we require 95% statistical confidence to publish, the publishing process itself acts as a filter that lets a high percentage of false results through. Journals mostly publish positive findings, and when only a small share of the hypotheses being tested are actually true, the false hypotheses that clear the 5% bar by chance can make up a large fraction of those positives. Commenting on the same article, Mark Liberman provides a more complete list of issues: “lack of replication, inappropriate use of ‘statistical significance’ testing, data dredging, the ‘file drawer effect’, inadequate documentation of experimental methods and data-analysis procedures, failure to publish raw data, the role of ambition and ideology, and so on.” Liberman then goes on to say, “But all the same, I’m going to push back. The problems in science, though serious, are nothing new. And the alternative approaches to understanding and changing the world, including journalism, are much worse.”
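
To see how that filter works, here is a minimal Python sketch of the base-rate arithmetic. The inputs are assumptions picked for illustration, not figures taken from the article: 1,000 hypotheses tested, 10% of them actually true, a 5% false-positive rate, and 80% statistical power. The function name is hypothetical.

```python
def false_discovery_share(n_hypotheses: int, share_true: float,
                          alpha: float, power: float) -> float:
    """Fraction of 'significant' (publishable) results that are actually false.

    Illustrative assumptions: every hypothesis is tested once, and only
    positive results count toward what gets published.
    """
    n_true = n_hypotheses * share_true      # hypotheses that really hold
    n_false = n_hypotheses - n_true         # hypotheses that don't
    true_positives = n_true * power         # real effects the studies detect
    false_positives = n_false * alpha       # flukes that clear the 5% bar anyway
    return false_positives / (true_positives + false_positives)


if __name__ == "__main__":
    share = false_discovery_share(1000, share_true=0.10, alpha=0.05, power=0.80)
    print(f"{share:.0%} of positive results are false")
```

With those inputs, 80 real effects and 45 flukes clear the bar, so roughly 36% of the publishable positives are false, even though each individual test had only a 5% chance of a false positive.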

Liberman is spot on in saying replication problems are not new. The reason is simple: doing science is really, really hard. It always has been. Papers that don’t pan out happen; that’s part of the scientific method. His pushback seems mainly directed at journalists writing clickbait stories such as “Is there something wrong with the scientific method?” A fair enough complaint. That said, Liberman is wrong to downplay the situation. The internet-fueled drive toward open data and preprints, and the reproducibility movement itself, are signs of the times. Science is in the midst of a big, though slowly unfolding, self-correction. This is goodness and progress. When Carl Zimmer recently asked on Twitter for a #sciencewordoftheyear, “microbiome” was (deservedly, in my view) a big favorite, but another obvious candidate was “irreproducible.”

Some fields have been harder hit than others. One example is drug research, as in Zimmer’s story above. Another is psychological priming, where exposure to a stimulus, such as words associated with being old, influences later behavior, such as walking slowly down a hallway. In fact, that foundational priming study with old words/slow walk is now being heatedly debated. Nobel Prize winner Daniel Kahneman published an open letter, which also singled out priming, with the much-quoted line “I see a train wreck looming.” And a recent attempt to replicate 15 studies in psychology found that 13 reproduced fine but 2 did not. Both of the non-reproduced studies involved priming. Everyone reporting on this is being cautious, and rightfully so. But priming is taking a pretty hard hit. In the end, science is a social endeavor, like any other human activity. We should not be shocked to discover that replicability problems come disproportionately from fields that inadvertently went down certain paths.

I got interested in scientific methodological problems after reading Gary Taubes’ book Why We Get Fat. Taubes argues we get fat by eating refined carbs. He singled out “observational studies” as particularly bad science. These studies follow a cohort for a length of time and then analyze the data after the fact, looking for correlations. He blames the wide use of observational studies for the sorry state of nutritional science. Here’s Taubes doing a takedown of a recent observational study on red meat. This kind of after-the-fact fishing expedition stands in contrast to the expensive gold standard: placebo-controlled, double-blind trials. While I’m convinced by Taubes, it’s important to note that blaming refined carbs for people getting fat isn’t widely accepted, though I think the consensus is shifting in that direction. For more detail see my low carb posts: part 1 and part 2. Speaking of red meat, let’s talk bacon. After reading Taubes I learned to stop worrying and have it all the time. So tasty.
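
The “fishing expedition” problem with observational studies can be put in numbers. Below is a minimal sketch, assuming a hypothetical study that checks 20 unrelated foods against one health outcome, where none of the foods has any real effect and each comparison is an independent test at the usual 5% level. The function names are illustrative, not from any library.

```python
import random


def chance_of_spurious_hit(n_tests: int, alpha: float = 0.05) -> float:
    """Probability that at least one of n independent tests of effects that
    don't exist comes out 'significant' at level alpha by chance alone."""
    return 1 - (1 - alpha) ** n_tests


def simulate_fishing(n_tests: int, alpha: float = 0.05,
                     n_studies: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo check of the same quantity. Under a true null hypothesis a
    p-value is uniform on [0, 1), so each test 'hits' with probability alpha."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(n_tests))
        for _ in range(n_studies)
    )
    return hits / n_studies


if __name__ == "__main__":
    print(f"exact: {chance_of_spurious_hit(20):.1%}")
    print(f"simulated: {simulate_fishing(20):.1%}")
```

The closed-form answer is 1 − 0.95^20, or about 64%: more likely than not, at least one food will look “significant” purely by chance. The simulation assumes independent tests with uniformly distributed p-values, which is what a true null hypothesis produces for a continuous test statistic.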

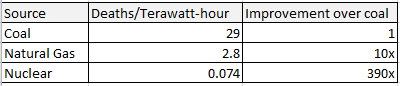

Next up: nuclear power. Last week’s post argued “Coal is so bad it makes fracking good.” I focused more on fracking than nukes on purpose, though the post included the chart below (derived from this paper):

Why avoid nukes? Well, it’s hard to have a rational conversation about it. But this misses a larger point. People do not become passionate about saving the planet because their calculator told them to. There’s no selfish motivation. We can understand environmentalism as an (admittedly mild) type of secular religion. Sorting your trash is a type of sacrament. You do it to be a good person. We see this everywhere in life. Volunteer work. Corporate culture, for both good and bad. People long to be emotionally bound to a larger ideal and purpose.

But nukes have an additional problem compared to fracking. Reactors can have catastrophic meltdowns. And people have a flawed heuristic where they unfortunately weigh spectacular and memorable events out of proportion. A good analogy is air travel versus car travel. Plane crashes are big news. Car crashes are not. So people fear air travel more. How do we get around this human tendency? By making air travel 10x safer per mile than car travel. If we want nuclear power, we need to accept human nature. Conceptually we could add another column to the table above, scaling back nuclear power’s death advantage by 10x. Nuclear is still better, but not by as much once you understand the need for a higher standard.
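
The “higher standard” adjustment is simple arithmetic. The sketch below uses made-up death rates for coal and nuclear (hypothetical placeholders, not the numbers in the chart) and applies the 10x “dread factor” to nuclear’s rate before comparing.

```python
def advantage_ratio(other_rate: float, nuclear_rate: float,
                    dread_factor: float = 1.0) -> float:
    """How many times safer nuclear looks than another power source once
    nuclear's death rate is inflated by a 'dread factor'."""
    return other_rate / (nuclear_rate * dread_factor)


# Hypothetical deaths per unit of energy, chosen only for illustration;
# these are NOT the values from the chart in the post.
COAL_RATE = 100.0
NUCLEAR_RATE = 0.1

if __name__ == "__main__":
    print(advantage_ratio(COAL_RATE, NUCLEAR_RATE))                   # plain comparison
    print(advantage_ratio(COAL_RATE, NUCLEAR_RATE, dread_factor=10))  # with a 10x higher standard
```

Whatever the real figures are, dividing nuclear’s advantage by 10 still leaves it ahead as long as its death rate starts out more than 10 times lower than the alternative’s.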

So what about the science and replicability? How risky are nukes? Start with what’s well established. The worst nuclear disaster of all time was Chernobyl, which led to 56 direct deaths. This count includes not just people who died right away, but also deaths from thyroid cancers that show up clearly in the statistics. Fukushima had no direct deaths. But direct deaths are few. That’s not where the action is. The controversy comes when estimating indirect deaths from very low levels of radiation exposure. For Chernobyl, these estimates range from 4,000 (World Health Organization) to 93,000 (Greenpeace).

The first thing to realize is that we’re talking about a very small increase in risk spread over a very large number of people. For example, the calculated 4,000 additional cancer deaths come from a population of about 600,000 with the highest exposure. A common analogy compares the additional risk from low-level exposure at Chernobyl to what you’d get from a CT scan, or to the additional risk from traveling on a long vacation. The second thing to realize is that the baseline cancer rate varies enough, and the increase is small enough, that it is not statistically possible to measure it. That is to say, even in principle, we know it’s impossible to scientifically establish whether the low-level radiation death estimates are correct. Hence the bickering, and why I’m including nukes in this post about replicability in science.
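
A quick back-of-the-envelope check on the figures above (4,000 projected deaths among roughly 600,000 of the most exposed people), as a short Python sketch; the function name is illustrative.

```python
def excess_risk(extra_deaths: float, population: float) -> float:
    """Average added risk of death per person in the exposed group."""
    return extra_deaths / population


if __name__ == "__main__":
    risk = excess_risk(4000, 600_000)
    print(f"about {risk:.2%} added risk, roughly 1 in {round(1 / risk)}")
```

That works out to roughly 0.67%, or about 1 in 150, and that is for the most heavily exposed group.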

So if the effect is so small you can’t measure it even in theory, how do you estimate it? You extrapolate linearly from higher doses, whose impacts are measurable. This is called the “linear no-threshold model,” usually abbreviated as the LNT model. The linear part is clear enough: if you receive 1/2 the dose, you get 1/2 the risk; 1/100 the dose gives 1/100 the risk; and so on. At higher doses the theory can be tested empirically, and the linear scaling works well enough. But the no-threshold part needs explaining. No-threshold means there is no lower limit below which a dose is safe. So even at levels that match natural background radiation on Earth, which evolution says we’re naturally adapted to, LNT still calculates an impact in theory. This no-threshold part of LNT is of course hotly contested, especially as it calculates effects so small you can’t even pull them out of the data. What LNT does is extend a scientific model into a region where it’s impossible to know whether it’s true or false. I can see why people do it. It’s perhaps better than nothing. But it’s speculative. The reproducibility police would not approve.
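
Here is a minimal sketch contrasting LNT with one possible threshold model. Every number is an assumption for illustration: the risk coefficient (expressed per millisievert), the 100 mSv threshold, and the scenario of a million people each receiving 1 mSv. The threshold model’s shape (zero below the threshold, linear above it) is likewise just one hypothetical variant.

```python
from typing import Callable

# Assumed risk coefficient for illustration only: excess lifetime risk of
# cancer death per millisievert. Not taken from the post or any agency.
RISK_PER_MSV = 5e-5


def lnt_risk(dose_msv: float, risk_per_msv: float = RISK_PER_MSV) -> float:
    """Linear no-threshold: risk is proportional to dose all the way down to zero."""
    return risk_per_msv * dose_msv


def threshold_risk(dose_msv: float, threshold_msv: float,
                   risk_per_msv: float = RISK_PER_MSV) -> float:
    """Hypothetical threshold model: no excess risk below the threshold,
    linear in the dose above it."""
    return risk_per_msv * max(0.0, dose_msv - threshold_msv)


def predicted_deaths(population: int, dose_msv: float,
                     risk_fn: Callable[[float], float]) -> float:
    """Expected extra deaths if everyone in the population gets the same dose."""
    return population * risk_fn(dose_msv)


if __name__ == "__main__":
    # Hypothetical scenario: a million people each receive a small 1 mSv dose.
    lnt = predicted_deaths(1_000_000, 1.0, lnt_risk)
    thr = predicted_deaths(1_000_000, 1.0, lambda d: threshold_risk(d, threshold_msv=100.0))
    print(f"LNT: {lnt:.0f} extra deaths, threshold model: {thr:.0f}")
```

Under LNT, a dose far too small to detect in any individual still multiplies out to a nonzero death toll across a large population (here, 50 deaths), while the threshold model predicts none. At these doses the data cannot tell the two apart, which is exactly the problem described above.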

So, like many science buffs, I think on balance science supports nukes. Fewer dead people. Less carbon and global warming. But we can’t expect environmental purists to be happy about it. If our addiction to carbon is likened to heroin, hard-core environmentalists want to go cold turkey now. Letting the addict smoke cigarettes (nukes or natural gas) to help get off heroin (coal) is not going to cut it. Only cold-turkey renewable perfection is acceptable. And this is as it should be. Utopian zeal is where progress comes from. Though I think the movement could use a few more pragmatists like Bjørn Lomborg.

What’s also worth noting is that support for nuclear power is stronger on the right than the left. So this is an unusual case where the left is arguably more antiscience than the right. I’ve written before about what drives science denialism, using a frame from Jonathan Haidt. Our moral passions bind us together as tribes, but blind us to anything contradicting our sacred beliefs. So, following Haidt’s logic, my own advocacy of science, nukes and bacon probably reveals a bit of misguided Dr. Strangelove passion as well. Perhaps the lesson is to stop worrying and enjoy it.
